Ready to master Python web scraping? It is a powerful way to collect data such as product prices or market trends, but IP blocks and JavaScript-heavy pages can trip you up. Proxying.io’s proxies help keep your scraper unblocked and running smoothly.

This guide walks you through building a simple scraper from setup to saving data.

Let’s dive in.

What You Will Learn

- Set up Python for web scraping.

- Use libraries like Requests, Beautiful Soup, and Pandas.

- Find HTML elements with Developer Tools.

- Save scraped data to a CSV or Excel file.

- Avoid blocks with Proxying.io proxies.

This web scraping tutorial runs on any operating system, with some trivial adjustments. Let’s get started.

Prerequisites

You need a recent Python 3 release, available on python.org. The f-strings used in this guide need Python 3.6 at minimum, and current Selenium and Pandas releases require newer versions than that. On Windows, check “Add Python to PATH” during install for easy pip access. Missed it? Rerun the installer and select “Modify” to add it.

For demonstration purposes, we’ll scrape Reddit.

Remember: This is a sample website. Replace it with the website you want to scrape.

Python Web Scraping Libraries

Python’s libraries make web scraping easy. Here’s what we’ll use:

- Requests: Makes simple HTTP requests to fetch web pages. Excellent for static pages.

- Beautiful Soup: Parses HTML so you can extract information.

- Selenium: Handles JavaScript-heavy sites through browser automation.

- Pandas: Writes the scraped data to CSV or Excel.

Install them:

pip install requests beautifulsoup4 selenium pandas pyarrow openpyxl

You’ll see a confirmation in your terminal after installing the libraries. Proxies are key for web scraping. Proxying.io’s residential proxies rotate IPs to avoid bans, ensuring smooth data collection.
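To double-check the installation, a small script can look up each package without importing it. This is a minimal sketch: the helper name `missing_packages` and the `REQUIRED` mapping are illustrative, and the mapping only pairs the pip names from the install command with the module names they provide.

```python
from importlib.util import find_spec

# pip package name -> importable module name (illustrative mapping)
REQUIRED = {
    "requests": "requests",
    "beautifulsoup4": "bs4",
    "selenium": "selenium",
    "pandas": "pandas",
    "openpyxl": "openpyxl",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose module cannot be found."""
    return [
        pip_name
        for pip_name, module_name in required.items()
        if find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages: pip install " + " ".join(missing))
    else:
        print("All scraping libraries are installed.")
```

Run it once after installing; if anything is listed as missing, rerun pip for those packages.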

Coding Environment

Choose a coding tool. PyCharm is beginner-friendly, but any other IDE or a Jupyter Notebook works too. In your IDE, use New > File to create a file (e.g., scraper.py). This prepares your Python web scraping project.

Browser Setup

Selenium needs a browser such as Firefox or Chrome. To make debugging convenient, start with a visible browser; later, switch to headless mode for speed. Selenium 4.6+ manages WebDriver binaries automatically, but a driver you install by hand must still match your browser version. Set up Chrome:

from selenium import webdriver
from selenium.webdriver import ChromeOptions

options = ChromeOptions()
driver = webdriver.Chrome(options=options)

This preps your browser for web scraping.

Targeting Website

We’ll scrape post titles and upvotes from https://old.reddit.com/r/technology/. The old Reddit layout is less JavaScript-heavy, making web scraping easier. Check https://old.reddit.com/robots.txt to confirm scraping is allowed for public data. Load the URL:

driver.get('https://old.reddit.com/r/technology/')

This sets up your web scraping target.
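The robots.txt check can also be done in code with Python’s standard `urllib.robotparser` module. The sketch below parses a made-up rule set offline; `SAMPLE_ROBOTS`, the example.com URLs, and the helper `is_allowed` are assumptions for illustration and do not reflect Reddit’s actual rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only (not any real site's robots.txt)
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /login
Allow: /r/
"""


def is_allowed(robots_text, url, user_agent="*"):
    """Check a URL against robots.txt rules supplied as plain text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS, "https://example.com/r/technology/"))
print(is_allowed(SAMPLE_ROBOTS, "https://example.com/login"))
```

For a live site, you could instead call `set_url()` with the robots.txt address and then `read()` before calling `can_fetch()`.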

Importing libraries

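These are commonly used libraries for web scraping in Python. This guide imports only Requests, Beautiful Soup, Selenium, and Pandas; lxml and Scrapy are optional alternatives and are not installed by the pip command above.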

- Requests

- Beautiful Soup

- lxml

- Selenium

- Scrapy

Start your script:

import pandas as pd
import requests
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver import ChromeOptions

These libraries drive your web scraping tool.

Extracting Data

To scrape data from any website, use Developer Tools (F12) to inspect the HTML. Post titles are in <a> tags with class "title" inside <div class="thing">. Upvotes are in <div class="score unvoted">.

Here is the code:

results = []
upvotes = []

# Parse the HTML that Selenium has already loaded
content = driver.page_source
soup = BeautifulSoup(content, 'html.parser')

# Each post lives in an element with class "thing"
for post in soup.find_all(attrs={'class': 'thing'}):
    title = post.find('a', attrs={'class': 'title'})
    score = post.find('div', attrs={'class': 'score unvoted'})
    # Keep only posts that have both a title and a score
    if title and score:
        results.append(title.text.strip())
        upvotes.append(score.text.strip())

This extracts titles and upvotes for your web scraping project.
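Selectors are easier to debug against a small static snippet than against a live browser session. The sketch below runs the same class-based lookups with Beautiful Soup’s CSS selector methods; `SAMPLE_HTML` is a made-up fragment that only imitates the structure described above, and `extract_posts` is an illustrative helper name.

```python
from bs4 import BeautifulSoup

# Made-up fragment imitating the structure described above
SAMPLE_HTML = """
<div class="thing">
  <div class="score unvoted">128</div>
  <a class="title" href="/post/1">First post</a>
</div>
<div class="thing">
  <a class="title" href="/post/2">Post without a score</a>
</div>
"""


def extract_posts(html):
    """Return (title, score) tuples for posts that have both fields."""
    soup = BeautifulSoup(html, "html.parser")
    posts = []
    for post in soup.select("div.thing"):
        title = post.select_one("a.title")
        score = post.select_one("div.score.unvoted")
        # Skip posts where either element is missing
        if title and score:
            posts.append((title.get_text(strip=True), score.get_text(strip=True)))
    return posts


print(extract_posts(SAMPLE_HTML))
```

Collecting each post as one tuple keeps a title and its score together, so the two values cannot drift out of alignment.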

Saving data

Save your data to a file using Pandas.

For CSV

df = pd.DataFrame({'Post Titles': results, 'Upvotes': upvotes})
df.to_csv('reddit_posts.csv', index=False, encoding='utf-8')

For Excel

Pandas can also export data to an Excel file, but this requires the openpyxl library. If you skipped it earlier, install it from your terminal:

pip install openpyxl

Now write the data to an Excel file:

df.to_excel('reddit_posts.xlsx', index=False)

Close the browser:

driver.quit()

Proxies

Websites may block scrapers that send too many requests. To stay anonymous while web scraping, use proxies to rotate IPs. Whether you use Proxying.io proxies or another provider, you will need these key details:

- Proxy server address

- Port

- Username

- Password

For proxies with Requests, use this format:

proxies = {
    'http': 'http://USERNAME:PASSWORD@PROXY_HOST:7777',
    'https': 'http://USERNAME:PASSWORD@PROXY_HOST:7777'
}
response = requests.get('https://old.reddit.com/r/technology/', proxies=proxies)
soup = BeautifulSoup(response.text, 'html.parser')

Replace USERNAME, PASSWORD, and PROXY_HOST with the details from your provider. If you have other proxies, adapt this format:

http://USER:PASS@proxy.provider.com:port

For Selenium, we offer easy integration. These ensure reliable web scraping.
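Credentials that contain characters such as `@` or `:` will break the proxy URL format above unless they are percent-encoded. Here is a minimal sketch that assembles the URL from the four details listed earlier; the helper names and the `proxy.example.com` host are placeholders, not real endpoints.

```python
from urllib.parse import quote


def build_proxy_url(host, port, username, password, scheme="http"):
    """Build a proxy URL, percent-encoding the credentials."""
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    return f"{scheme}://{user}:{pwd}@{host}:{port}"


def build_proxies(host, port, username, password):
    """Return a mapping in the shape Requests expects for its proxies argument."""
    url = build_proxy_url(host, port, username, password)
    return {"http": url, "https": url}


# Placeholder values for illustration
print(build_proxy_url("proxy.example.com", 7777, "user", "p@ss:word"))
```

The resulting dictionary can be passed as `proxies=` in the same way as the hand-written one.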

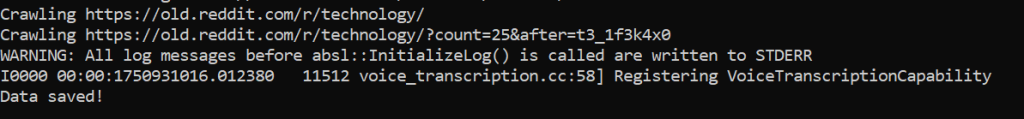

Full Python Web Scraping Code

Here’s a script to scrape pages, integrating proxies with Selenium.

import time

import pandas as pd
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver import ChromeOptions

# Set up proxy with Selenium
options = ChromeOptions()
options.add_argument('--headless=new')
# Note: Chrome may ignore credentials embedded in --proxy-server;
# if authentication fails, check your provider's Selenium instructions
options.add_argument('--proxy-server=http://USERNAME:PASSWORD@PROXY_HOST:7777')
driver = webdriver.Chrome(options=options)

pages = ['https://old.reddit.com/r/technology/', 'https://old.reddit.com/r/technology/?count=25&after=t3_1f3k4x0']
results = []
upvotes = []

for page in pages:
    print(f'Crawling {page}')
    driver.get(page)
    time.sleep(2)  # Avoid bans
    soup = BeautifulSoup(driver.page_source, 'html.parser')
    for post in soup.find_all(attrs={'class': 'thing'}):
        title = post.find('a', attrs={'class': 'title'})
        score = post.find('div', attrs={'class': 'score unvoted'})
        if title and score:
            results.append(title.text.strip())
            upvotes.append(score.text.strip())

driver.quit()

# Save the collected posts
df = pd.DataFrame({'Post Titles': results, 'Upvotes': upvotes})
df.to_csv('reddit_posts.csv', index=False, encoding='utf-8')
print('Data saved!')

The output should look like:

Pro Tips

Enhance your web scraping:

- Handle Errors:

try:
    response = requests.get(page, proxies=proxies)
    response.raise_for_status()
except requests.RequestException as e:
    print(f"Error: {e}")

- Avoid Bans: Add delays between requests:

import time
time.sleep(2)

- Set User-Agent:

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/91.0'}
response = requests.get(page, headers=headers, proxies=proxies)

- JavaScript Sites: Use Selenium for dynamic content.

- Scale Up: Try aiohttp (pip install aiohttp) for async web scraping:

import asyncio
import aiohttp

async def fetch(page):
    async with aiohttp.ClientSession() as session:
        async with session.get(page) as response:
            return await response.text()

text = asyncio.run(fetch(page))

- Stay Ethical: Scrape public data and respect robots.txt.
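The error-handling and delay tips above can be combined into a retry helper that waits longer after each failure. This is a sketch rather than a definitive implementation: `fetch_with_retries` is a hypothetical helper, `fetch` stands for any function that takes a URL and raises on failure, and the doubling delay is just one common choice.

```python
import time


def fetch_with_retries(fetch, url, retries=3, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url), retrying with a doubling delay after each failure."""
    last_error = None
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception as error:  # in practice, narrow this to requests.RequestException
            last_error = error
            # Wait 2s, 4s, 8s, ... but not after the final attempt
            if attempt < retries - 1:
                sleep(base_delay * (2 ** attempt))
    raise last_error
```

With Requests, `fetch` could be a small function that calls `requests.get(url, proxies=proxies)`, then `raise_for_status()`, and returns the response text. The `sleep` parameter exists only so the delay can be swapped out during testing.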

Data Cleaning and Normalization (Optional)

Scraped Reddit data is often messy, so cleaning is essential: remove duplicates, fix errors, and filter noise. Normalization standardizes formats, such as converting upvotes to integers or tidying post titles, so the data is ready for accurate analysis or modeling. Deduplicate titles and upvotes together as pairs; deduplicating the titles alone would leave the two lists misaligned.

pairs = [(title.strip(), score) for title, score in dict.fromkeys(zip(results, upvotes)) if title]  # Remove duplicates, keep order
results = [title for title, _ in pairs]
upvotes = [int(score) if score.isdigit() else 0 for _, score in pairs]  # Normalize to int

This ensures your web scraping data is analysis-ready.
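The `isdigit()` check above turns every non-numeric score into 0, which hides the difference between a real zero and a value that could not be read. A slightly more careful parser might look like the sketch below. The input formats it handles (thousands separators, a "k" suffix, and non-numeric placeholders) are assumptions about what a page might display, and `parse_score` is an illustrative name.

```python
def parse_score(text):
    """Convert a displayed score to an int, or None if it is not numeric."""
    cleaned = text.strip().lower().replace(",", "")
    multiplier = 1
    # Assumed abbreviation: a trailing "k" means thousands
    if cleaned.endswith("k"):
        multiplier = 1000
        cleaned = cleaned[:-1]
    try:
        return int(round(float(cleaned) * multiplier))
    except (ValueError, OverflowError):
        # Placeholders, empty strings, and other non-numeric text
        return None


for raw in ["128", "1,204", "12.3k", "hidden", ""]:
    print(repr(raw), "->", parse_score(raw))
```

Returning `None` instead of 0 lets you decide later whether to drop unreadable rows or fill them in.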

Web Scraping Use Cases

- Market Research: Use Python to scrape r/technology and spot the latest trends before they go mainstream.

- Sentiment Analysis: Collect user comments to understand public opinion on different products or news.

- Content Creation: Find hot and trending topics to create content your audience cares about.

- Data Science: Build your own AI-ready datasets by scraping and organizing real-world Reddit Data.

Proxies Are Essential

Web scraping at scale requires proxies, because IP bans and CAPTCHAs can halt you. Proxying.io’s proxies rotate IPs so you can scrape from locations worldwide, such as region-specific subreddits. Other proxies do too, but our Web Scraper API makes proxies, rendering, and CAPTCHAs simple.

Common Mistakes

Avoid these mistakes in web scraping:

- Skipping error handling.

- Missing JavaScript content (use Selenium).

- Rapid requests without delays.

- Wrong Selectors (check Developer Tools).

- Scraping without proxies, risking bans.

Troubleshooting

- Empty results: If you get an empty list, verify your selectors in Developer Tools.

- Uneven Lists: If the two lists have different lengths, pair them with Python’s built-in zip() function, which stops at the shorter list:

df = pd.DataFrame(list(zip(results, upvotes)), columns=['Post Titles', 'Upvotes'])

- Selenium Issues: Check that your browser and driver versions are compatible.
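Because `zip()` drops the extra items without any message, it can hide a selector problem. A small wrapper, sketched here with the hypothetical name `pair_rows`, reports the mismatch before pairing so you know rows were lost.

```python
def pair_rows(titles, scores):
    """Pair titles with scores, warning when the lists differ in length."""
    if len(titles) != len(scores):
        print(
            f"Warning: {len(titles)} titles but {len(scores)} scores; "
            "extra items will be dropped"
        )
    # zip() stops at the shorter list
    return list(zip(titles, scores))


rows = pair_rows(["Post A", "Post B", "Post C"], ["10", "25"])
print(rows)
```

The returned list of tuples can be passed to `pd.DataFrame(..., columns=[...])` exactly as in the troubleshooting line above.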

Conclusion

Nice work building your Python web scraping tool. You’re ready to grab data like a pro, and Proxying.io’s proxies keep you unblocked for global scraping. Try our Web Scraper API for an easier route, or keep tweaking your script.