Web scraping is a powerful technique for collecting data from websites, whether for market research, competitor analysis, or trend monitoring. Python is widely used for web scraping, and among many tools it offers, Scrapy is one of the most powerful and scalable frameworks available. If you encounter errors in Python, refer to the guide for solutions.

Let’s see what Scrapy is, why it is ideal for large-scale projects, and how you can build your first spider step-by-step using Scrapy in Python.

What is Scrapy?

Scrapy is an open-source web scraping framework written in Python. It was built specifically for extracting structured data from websites and processing it for a variety of use cases, such as saving it to a database, exporting to CSV/JSON, or feeding it into machine learning pipelines.

Whether you’re gathering job ads or scraping product data from Amazon, Scrapy makes it efficient.

Unlike basic scraping tools like BeautifulSoup or Requests, Scrapy engine allows:

- Asynchronous requests for faster scraping

- Built-in handling of redirects and cookies

- Modular spiders for code reusability

- Middleware support for proxies and user agents

It is more than a library; it is a full framework designed for performance and scalability.

Installing Scrapy

Before you dive into coding, install Scrapy using pip:

pip install scrapyIt’s recommended to use a virtual environment to avoid conflicts with other Python packages.

Creating a Scrapy Project

Scrapy organizes code using a project structure. You can start a new Scrapy project by running:

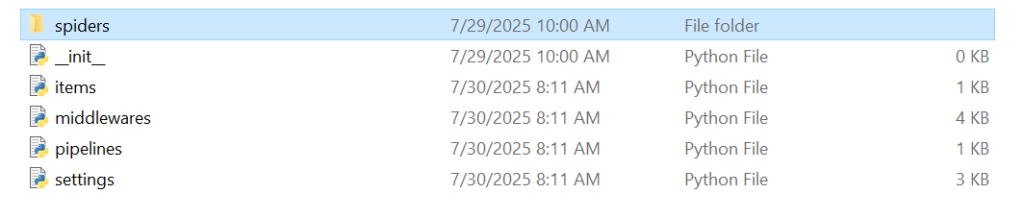

scrapy startproject scrapy_projectThis command creates a directory with the following structure:

The spiders/ folder is where you will write your scraping code.

Writing Your First Spider

Spiders are classes that define how a certain site should be scraped. Let’s build a simple spider that scrapes book titles from books.toscrape.com.

Navigate to the spiders folder and create a file called book_spider.py:

import scrapy

class BooksSpider(scrapy.Spider):

name = "books"

start_urls = ['http://books.toscrape.com']

def parse(self, response):

for book in response.css('article.product_pod'):

yield {

'title': book.css('h3 a::attr(title)').get(),

'price': book.css('p.price_color::text').get(),

}

next_page = response.css('li.next a::attr(href)').get()

if next_page:

yield response.follow(next_page, self.parse)This spider:

- Starts on the homepage

- Extracts book titles and prices using CSS selectors

- Follows pagination links to scrape all pages

To run the spider, execute:

scrapy crawl booksScrapy will handle requests asynchronously and output the results in the terminal.

Exporting Data

To export the scraped data to a file, use the -o flag

scrapy crawl books -o books.jsonYou can change the format to CSV or ML as needed:

scrapy crawl books -o books.csvThis makes it easy to integrate your scraped data with other tools or analytics platforms.

Configuring Settings

Scrapy gives you control over how your spider behaves through the settings.py file. Here, you can set things like:

- User agent strings

- Request delays

- Retry logic

- Proxy configurations

- Pipeline options

For instance, to avoid overloading the target site, you can introduce a delay:

DOWNLOAD_DELAY = 2To simulate a real browser and reduce the chance of blocking:

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'Using Scrapy Pipelines

Scrapy’s pipeline system allows you to process scraped items, such as cleaning data, validating entries, or saving to databases.

In pipelines.py, define your custom processing logic:

class CleanDataPipeline:

def process_item(self, item, spider):

item['title'] = item['title'].strip()

return item

Then activate it in settings.py:

ITEM_PIPELINES = {

'scrapy_project.pipelines.CleanDataPipeline': 300,

}Using Middleware and Proxies

Websites often block scraping bots. To bypass this, you can use residential or data center proxies at Proxying.io or user agents with Scrapy middleware.

Here’s an example middleware for rotating user agents:

import random

class RandomUserAgentMiddleware:

user_agents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)',

'Mozilla/5.0 (X11; Linux x86_64)',

]

def process_request(self, request, spider):

request.headers['User-Agent'] = random.choice(self.user_agents)To enable it, modify the DOWNLOADER_MIDDLEWARES settings

You can also integrate third-party proxy services for more reliability, especially when scraping at scale.

Why Use Scrapy Over Other Tools?

If you’re new to web scraping, you might ask, Why use Scrapy when libraries like Requests or BeautifulSoup exist?

Here’s why:

| Feature | Scrapy | BeautifulSoup + Requests |

| Async Support | Built-in | Manual setup needed |

| Speed | Very fast | Slower |

| Crawling support | Built-in | Manual logic needed |

| Item pipelines | Modular | Requires custom code |

| Scalability | Excellent | Limited |

For small scripts, BeautifulSoup might be fine. But for large projects with thousands of pages, Scrapy is the clear winner.

Final Thoughts

Whether you’re scraping eCommerce data, job listings, or social media content, Scrapy in Python, offers a robust and flexible framework to get the job done efficiently. It has a modular architecture, built-in support for concurrency, and powerful extensibility.